The Use of Student Perceptual Data as a Measure of Teaching Effectiveness

Jackie Burniske, Debra L. Meibaum

Introduction

States are carefully reviewing their teacher evaluation systems to make them more meaningful and comprehensive by including multiple measures. This represents a dramatic change from the traditional use of classroom observation by a school administrator as the primary means of evaluating a teacher. Multiple measures of teaching effectiveness are being added to legislation, policy, and practice across the country. This paper looks at one of those multiple measures—the use of student perceptual data to inform a performance-based teacher evaluation.

"What we also found that has not been discussed in previous literature is that there was a great deal of agreement about the characteristics of good teachers across varying educational communities. That is, second graders, preservice teachers, and inservice teachers seem to agree easily on the requisite characteristics for being a good teacher…"

(Murphy, Delli, & Edwards, 2004, p. 87)

Procedures

To identify studies on the use of student perceptual data for teacher evaluation, staff from the Southeast Comprehensive Center (SECC) and the Texas Comprehensive Center (TXCC) conducted searches of EBSCO’s Academic Search Elite database, ERIC, and online search engines (i.e., Google, Google Scholar, Bing, and Yahoo). The staff used combinations of terms that included "teacher evaluation," "teacher performance," "teacher practice," "performance-based," "alternative measures," "student surveys," "student feedback," "student ratings," "student perceptions," "student opinions," "classroom," and "instructional environment."

Names of specific models, projects, and experts identified in the initial literature search were also used as search terms: "Tripod Survey," "Harvard researcher Ron Ferguson," "Cambridge Education," "halo effect," "Gallup Student Poll," "MET Project," "Davis School District, Utah," "Experience Sampling Method," "Memphis Teacher Effectiveness Initiative," "Quality Assessment Notebook," "School Performance Framework," "Teacher Behavior Inventory," and "Teaching as Leadership."

Information was also obtained from national content centers and, when appropriate, directly from recognized experts on teacher evaluation. In addition, state and district websites were searched to gather information about current practices.

The literature searches focused on research conducted within the last 10 years. However, when reference lists were reviewed, staff found that some older research provided key information on the topic. Therefore, these seminal publications were included in the resources used to develop this paper.

Limitations

The goal of this briefing paper is to provide decision makers and stakeholders with information on the use of student perception surveys in teacher evaluation systems. This information is intended to help them weigh options and make informed recommendations to local education agencies. After reviewing available resources, the authors conducted telephone interviews with national content center staff and contacted national experts from relevant areas within the field of educational research. Information obtained from these non-peer-reviewed sources has been incorporated into this briefing paper.

It must be emphasized that the selected tools featured herein do not include all student perception surveys that are in use. SECC and TXCC staff focused on tools that have been reported as adequate by researchers, organizations, and assessment experts. Hence, the authors make no judgment on the technical soundness (e.g., reliability, validity) of identified measures. In addition, decision makers should recognize that the selected information in this report is not inclusive of all available resources on the topic of student perception surveys as a means of teacher evaluation. Rather, it reflects what could be compiled within the imposed time constraints. Inclusion of programs, processes, or surveys within this paper does not in any way imply endorsement by SEDL or its comprehensive centers.

Historical Perspective

Solicitation of student perceptions regarding their teachers has a long history in the United States. In 1896, students in grades 2–8 in Sioux City, Iowa, provided input on effective teacher characteristics (Follman, 1995). In reviewing 154 articles published over a 74-year time period, Aleamoni (1999) identified 16 myths regarding student ratings of their instructors. Follman (1992, 1995) conducted empirical literature reviews of public elementary and secondary students’ teacher effectiveness ratings. Goe, Bell, and Little (2008) included student ratings as one of seven methods of measuring teacher effectiveness.

Benefits and Limitations of Student Surveys

Research indicates that there are both benefits and limitations to using student surveys in teacher evaluations, as is true with many evaluation measures. In the table below, findings from research on using student surveys as a measure of teacher effectiveness are grouped into five major categories: 1) use of students as raters, 2) discrimination between teaching behaviors and student/teacher interactions, 3) reliability and validity of student ratings, 4) impact of student demographics, and 5) use of student ratings. Multiple sources often report similar findings. In making decisions on the use of student surveys as a component of a comprehensive teacher evaluation system, policy makers may benefit from considering both the benefits and the limitations.

Benefits and Limitations of Student Surveys

Use of Students as Raters

Benefits

Students have extensive daily contact with teachers, resulting in unique perspectives and ratings of teacher behaviors (Follman, 1992, 1995; Peterson, Wahlquist, & Bone, 2000; Worrell & Kuterbach, 2001).

Students are the direct recipients of instruction and have more experience with their teachers than other evaluators (Follman, 1992).

Student ratings are consistent from year-to-year (Aleamoni, 1999).

Student responses distinguish between teachers; students may rate one teacher high and another low, based on the quality of teaching the student experiences (Ferguson, 2010).

Limitations

Student raters lack knowledge of the full range of teaching requirements and responsibilities, such as curriculum, classroom management, content knowledge, and professional responsibilities (Follman, 1992, 1995; Worrell & Kuterbach, 2001; Goe et al., 2008).

Discrimination Between Teaching Behaviors and Student/Teacher Interactions

Benefits

Students discriminate between effective teaching behaviors and warm, caring, supportive teacher/student interactions (Peterson et al., 2000; Aleamoni, 1999).

Secondary students can discriminate between effective and ineffective teachers (Follman, 1992; Worrell & Kuterbach, 2001).

Limitations

There may be negative effects of feedback from student ratings on subsequent teacher behaviors (Follman, 1992—citing Eastridge, 1976).

Reliability and Validity of Student Ratings

Benefits

Student ratings are a valid and reliable data source (Peterson et al., 2000; Worrell & Kuterbach, 2001).

Elementary and secondary student raters are no more impacted by validity concerns, such as halo and leniency effects, than adult raters (Follman, 1992, 1995).

Elementary and secondary students are as reliable as older, adult raters in rating teaching behaviors (Follman, 1992, 1995; Worrell & Kuterbach, 2001).

Elementary students, including preschoolers as young as four years old, can rate reliably (Follman, 1995).

Appropriately administered, well-constructed instruments yield high-reliability results; subjective and correlational studies indicate positive validity of student rating results (Aleamoni, 1999).

Secondary and older primary education students provide ratings of teacher behavior that are stable, reliable, valid, and predictive for teacher evaluation (den Brok, Brekelmans, & Wubbels, 2004).

Student responses [in the Tripod survey] are reliable, valid, and stable over time at the classroom level (Ferguson, 2010).

Limitations

Reliability and validity of student ratings depend on the content, construction, and administration of student rating instruments (Goe et al., 2008; Little, Goe, & Bell, 2009; Kyriakides, 2005; Aleamoni, 1999).

Potential student rater bias may affect teacher rating (Follman, 1992—citing Eastridge, 1976).

The earliest age by which students can adequately rate their teachers is unresolved, and that must be considered when applying ratings by students who are below grade 3 (Follman, 1995).

Impact of Student Demographics

Benefits

Student rater demographic characteristics (for example, expected or obtained course grade, pupil and/or student gender, grade point average, subject matter) did not influence teacher ratings (Follman, 1992—citing Thompson, 1974, and Veldman & Peck, 1967).

Limitations

Student rater demographics and personality traits have a perceived significant influence on student ratings (Follman, 1992, citing Eastridge, 1976).

Student rating research includes widely inconsistent results regarding the correlation between student grades and instructor ratings (Aleamoni, 1999).

Use of Student Ratings

Benefits

Student ratings are more highly correlated with student achievement than principal ratings and teacher self-ratings (Kyriakides, 2005; Wilkerson et al., 2000).

Student ratings are a moderate predictor of student achievement (Worrell & Kuterbach, 2001).

Appropriate use of student ratings feedback by the teacher can result in an improved teaching and learning environment (Follman, 1992, 1995; Aleamoni, 1999).

Results of student ratings can be collected anonymously (Little et al., 2009; Worrell & Kuterbach, 2001).

Student ratings require minimal training and are both cost- and time-efficient (Little et al., 2009; Worrell & Kuterbach, 2001).

Student ratings of teachers align with student achievement; students of teachers rated higher in instructional effectiveness achieve at higher levels in those teachers’ classes (MET Project, 2010a; Crow, 2011).

Limitations

Student ratings should not be the primary teacher evaluation instrument, but should be included in a comprehensive teacher evaluation process (Goe et al., 2008; Little et al., 2009; Peterson et al., 2000; Follman, 1992, 1995).

Confidentiality concerns in regard to protecting the anonymity of student raters must be addressed (McQueen, 2001).

Results of student ratings may be misinterpreted and misused (Kyriakides, 2005; Aleamoni, 1999).

Use of data by administrators for punitive purposes could result in teachers’ lack of support for the student ratings (Aleamoni, 1999).

Districts and States Using Student Surveys

Davis School District, the third-largest school district in Utah, began revising its evaluation system to use multiple measures for assessing teacher effectiveness in 1995. Today, teachers select from among a group of data sources, including student surveys. The evaluation unit of the district’s educator assessment committee developed the student surveys, and it administers and scores them (Peterson, Wahlquist, Bone, Thompson, & Chatterton, 2001). In the Davis School District Educator Assessment System (EAS), there are three student surveys: seven questions for grades K–2, nine questions for grades 3–6, and ten questions for grades 7–12. All three surveys have the same choices of "no," "sometimes," and "yes"; the K–2 students respond through face drawings. The fourth question of the first two surveys states, "My teacher is a good teacher." Question 4 in the secondary student survey states, "This is a good teacher." If a teacher chooses to use surveys, they are administered to one class at the K–6 grade level or one class period at the secondary level (Davis School District, 2009). Teachers in the district are supportive of the system allowing multiple measures: "One recent survey showed that 84.5 percent of our teachers liked the new techniques" (Peterson et al., 2001, p. 41).

Currently, many states are planning to use student surveys as one of multiple means for measuring teaching effectiveness. In 2012, Georgia is piloting the use of student surveys of instructional practice. The surveys will serve as one of the state’s three required measures of teacher effectiveness under its Teacher Keys Evaluation System. Surveys will be used at four grade spans: K–2, 3–5, 6–8, and 9–12 (Barge, 2011). Massachusetts educators will have the option of using student feedback as one of multiple measures for teacher evaluation beginning in the 2013–2014 school year. The Massachusetts Department of Education is in the process of selecting instruments for obtaining student feedback (Center for Education Policy and Practice, 2011). Georgia and Massachusetts are two of 11 states and the District of Columbia revising their teacher evaluation system with their U.S. Department of Education Race to the Top funding geared toward great teachers and leaders.

The best-known example of districts currently using student surveys to elicit student perceptions of the classroom instructional environment for a teacher’s evaluation is the Measures of Effective Teaching (MET) project, funded by the Bill & Melinda Gates Foundation. Urban districts participating in this project include Charlotte-Mecklenburg, NC; Dallas, TX; Denver, CO; Hillsborough County, FL; Memphis, TN; New York City, NY; and Pittsburgh, PA. The MET project uses the Tripod survey instrument, which was developed and revised over the past 10 years by Ron Ferguson of Harvard University and has been administered by Cambridge Education since 2007. The focus of the Tripod student perceptions survey is on the instructional quality of the classroom, with an assessment in three areas (thus the name tripod): content, pedagogy, and relationships. The Tripod surveys are designed for three levels of students: K–2, 3–5, and 6–12. Three thousand teachers are participating in the MET project (MET Project, 2010b; Tripod Project, 2011).

The use of multiple measures for evaluating teaching is dynamic, as new states and districts consider additional ways to gain a more comprehensive assessment of a complex task—teaching. Classroom-level student perception data are being considered for use by at least 11 states. In addition, some states are considering the inclusion of a community or parent survey or feedback, a teacher portfolio or evidence binder, classroom artifacts, goal setting, and other measures.

The following chart identifies states that have, as of this writing, proposed using student surveys or feedback as one of a list of recommended additional measures of teaching effectiveness. Thus far, Georgia is the only state that requires a student survey of instructional practice as one of three parts of teachers’ effectiveness measure score; the remaining states allow student surveys as an optional measure.

| State | Use of Student Surveys or Feedback as an Optional Measure | Community or Parent Survey | Review of Teacher Portfolio or Evidence Binder | Analysis of Classroom Artifacts | Goal Setting | Other or Not Specified |
|---|---|---|---|---|---|---|
| Arizona | ♦ | | | | | |
| Colorado | ♦ | ♦ | ♦ | ♦ | | |
| Delaware | ♦ | ♦ | ♦ | ♦ | ♦ | ♦ |
| District of Columbia | ♦ | ♦ | ♦ | | | |
| Florida | ♦ | ♦ | | | | |
| Georgia | ♦ | | | | | |
| Idaho | ♦ | | | | | |
| Illinois | ♦ | | | | | |
| Indiana | ♦ | ♦ | | | | |
| Iowa | ♦ | | | | | |
| Kentucky | ♦ | ♦ | ♦ | ♦ | ♦ | ♦ |
| Louisiana | ♦ | | | | | |
| Maryland | ♦ | | | | | |
| Maine* | ♦ | ♦ | ♦ | ♦ | ♦ | ♦ |
| Massachusetts | ♦ | ♦ | ♦ | ♦ | | |
| Michigan | ♦ | ♦ | ♦ | ♦ | ♦ | ♦ |
| Minnesota | ♦ | ♦ | | | | |
| New York | ♦ | ♦ | ♦ | ♦ | ♦ | ♦ |
| North Carolina | ♦ | ♦ | ♦ | | | |
| Ohio | ♦ | ♦ | ♦ | | | |
| Oklahoma | ♦ | | | | | |
| Rhode Island | ♦ | ♦ | ♦ | ♦ | ♦ | |
| Tennessee | ♦ | | | | | |
| Washington | ♦ | ♦ | ♦ | ♦ | ♦ | |

*Information for Maine provided by S. Harrison (personal communication, December 29, 2011). Harrison is the Maine Schools for Excellence Project Director at the Maine Department of Education.

Sources: Crow, 2011; National Comprehensive Center for Teacher Quality, 2010; National Council on Teacher Quality, 2011

Examples of Student Surveys

Over the past decade, the Tripod Project has worked with over 300,000 students in the U.S., Canada, and China. In addition to the student survey used in the MET project, Tripod has a parent and teacher survey (Tripod Project, 2011). For the MET student perception survey, the student responds to four to eight statements categorized under each of the 7 C’s: care, control, clarify, challenge, captivate, confer, and consolidate. Over the years the survey items have been validated and refined to capture the essential elements of classroom-level teaching and learning. The initial findings of the MET project demonstrate that student perceptions can be one of multiple measures that reliably contribute to a balanced view of teacher performance and effectiveness (Ferguson, 2010). The MET paper, Learning About Teaching: Initial Findings from the Measures of Effective Teaching Project (2010c), provides a list of the survey questions.

The Questionnaire on Teacher Interaction (QTI) has been used in the Netherlands and Australia as well as the United States since the 1980s. It has from 50 to 77 items (depending on the language and version) and uses a 5-point Likert scale from “never” to “always” (Wubbels, Levy, & Brekelmans, 1997). There are eight sectors in the survey: strict, leadership, helping/friendly, understanding, student freedom, uncertain, dissatisfied, and admonishing (Opdenakker, Maulana, & den Brok, 2011). Research on the QTI conducted over the years found that the questionnaire measures classroom climate reliably. It also demonstrated that a teacher’s interpersonal skills affect both student achievement and attitude (Wubbels et al., 1997). The QTI can be found in the book Do You Know What You Look Like?: Interpersonal Relations in Education (Wubbels & Levy, 1993). Follman (1992) reported that two other classroom-level tools, the Pupil Observation Survey (developed in the 1960s, with 38 items) and the Student Evaluation of Teaching (developed in the 1970s), were used frequently and found to be psychometrically sound.

In addition, many student perception surveys are available at the school level and may be useful as an evaluation measure for all teachers. The Gallup Student Poll, designed by Gallup, Inc., was first administered in 2009 to students in grades 5–12 after many years of review and research. The 20-item, 5-point Likert scale poll allows responses ranging from "strongly agree" to "strongly disagree"; it provides measurements of hope, engagement, and well-being and predicts "student success in academic and general youth development settings" (Lopez, Agrawal, & Calderon, 2010, p. 1), although it does not rate teachers individually. According to Lopez et al., a number of psychometric studies—conducted from 2008 to 2010—found positive correlations with related measures, internal consistency, and other psychometrically sound results. (The Lopez et al. report contains a copy of the Gallup Student Poll.) Currently, an individual teacher measurement to assess student perceptions at the classroom level is under development by Gallup (G. Gordon, personal communication, January 1, 2012—Gary Gordon, EdD is a strategic consultant with Gallup Consulting). Another schoolwide survey is the High School Survey of Student Engagement (Yazzie-Mintz, 2010), and there are many other available school surveys, which vary by the facet of school or student life being measured, for example, risk behaviors and school climate.

Using Student Perception Data Effectively

For student perceptions to be meaningfully accepted and used in high-stakes teacher evaluation, they must be used as only one of multiple measures. The student data should be collected more than once during the school year. At the secondary level, the survey should be administered to more than one class, and at all levels the data should be gathered over multiple years before any dramatic decisions are made (Ramsdell, 2011).

In addition to being used as part of a teacher evaluation system, student perception data should also be used to inform professional learning for teachers. The data from the surveys can be used by districts to look across their schools, by schools to look at their classrooms, and by classroom teachers to look at their students. Professional development can then be targeted, and the progress of teachers monitored (Ramsdell, 2011; Crow, 2011). The main goal of the multiple measures for teaching effectiveness is to improve teaching and learning.

Summary

Student survey instruments can provide valuable insight into the teaching and learning environment of a classroom. "A professional teacher evaluation program would include the criteria of student achievement, and ratings by administrators, peers, and selves, as well as students who—in the final analysis—have the deepest, broadest, and most veridical perception of their teacher." (Follman, 1992, p.176). As such, student survey instruments can be a valuable component when designing a 21st century comprehensive teacher evaluation system.

State Highlights

The states served by the TXCC and the SECC were invited to contribute information on their work being done around teacher evaluations. The following highlights were provided by the respective state education agencies.

Alabama

Adopted by the Alabama State Board of Education in May 2009, then piloted in 2009-2010, EDUCATEAlabama is a formative system designed to provide information about an educator’s current level of practice within the Alabama Continuum for Teacher Development, which is based on the Alabama Quality Teaching Standards (AQTS), Alabama Administrative Code §290-3-3-.04. The AQTS constitutes the foundation of the teaching profession while the Continuum is a tool used to guide educator reflection, self-assessment, and goal setting for professional learning and growth.

Pursuant to the Alabama Administrative Code §290-3-2-.01(28), EDUCATEAlabama supports sustained and collaborative activities for educators designed to increase the academic achievement of all students. The activities must be consistent with the Alabama Standards for Professional Development, Alabama Administrative Code §290-4-3-.01(3). The activities must strengthen pedagogical knowledge and promote the acquisition of research-based strategies. For currently employed Alabama educators, the activities chosen to improve practice must be supported by data from local schools. Professional Learning Plans (PLP) must be approved by the employing superintendent.

EDUCATEAlabama At A Glance

Development

2009

A group of stakeholders, composed of Alabama instructional leaders and educators, worked diligently in 2009 to develop the EDUCATEAlabama process. As a result, the EDUCATEAlabama Professional Learning Collaborative replaced the Professional Education Personnel Evaluation (PEPE) Program as the formative evaluation process to meet the state’s 1988 requirement of personnel evaluation for Alabama’s educators.

Pilot

2009-2010

The EDUCATEAlabama process was piloted statewide in 2009-2010, providing a formative system designed to provide data about an educator’s current level of practice within the Alabama Continuum for Teacher Development based on the Alabama Quality Teaching Standards. As the process implies, EDUCATEAlabama is a professional learning collaborative. During the pilot, data were used by the instructional leader and educator to set expectations, goals, and a plan of action for educator professional growth and learning. Instructional leaders and educators received training in 2009-2010.

Transitioning Online

2010

In July 2010 the EDUCATEAlabama survey was completed by over 1,000 principals, assistant principals, evaluation coordinators, central office staff, and superintendents responsible for evaluating teachers, providing feedback on the EDUCATEAlabama 2009-2010 pilot year. In August 2010 a workgroup of Alabama practitioners refined the EDUCATEAlabama process based on feedback from the survey. Based on the workgroup’s recommendations, Alabama State Department of Education (SDE) and the Alabama Supercomputer Authority (ASA) developed, then implemented the current EDUCATEAlabama online process in December 2010.

EDUCATEAlabama

2011

Based on extensive practitioner feedback received in spring 2011, the SDE and ASA further refined the EDUCATEAlabama online process, which Alabama’s instructional leaders and educators are effectively implementing in the 2011-2012 school year. The 2010-2011 EDUCATEAlabama Data Reports at state, system, school, and individual levels are providing valuable information regarding how educators self-assess their current level of professional practice, dialogue with instructional leaders, plan their professional study, and then implement a Professional Learning Plan, favorably impacting the students and families they serve. For further information regarding EDUCATEAlabama, please visit the SDE Leadership and Evaluation website at http://www.alex.state.al.us/leadership. Once there, click on Educator Evaluations to find statewide data reports, training guides, tutorials, recorded webinars, cost-free professional study opportunities, and more.

Contact: Ms. Chris Wilson, Project Administrator, EDUCATEAlabama at educatealabama@asc.edu.

Georgia

Teacher Keys Evaluation System

2011-2012 Pilot

From January 2012 through May 2012, as part of the Race to the Top Initiative, Georgia will pilot the Teacher Keys Evaluation System (TKES). TKES is a complete evaluation system that will allow Georgia to ensure consistency and comparability across districts based on a common definition of teacher effectiveness. For 2012, Georgia has partnered with 26 school systems throughout the state to pilot TKES. Some systems will pilot TKES with a select number of schools participating while others will pilot the system in 10% of the schools within their respective districts.

The primary purpose of TKES is to do the following:

- optimize student learning and growth;

- improve the quality of instruction by ensuring accountability for classroom performance and teacher effectiveness;

- contribute to successful achievement of the goals and objectives defined in the vision, mission, and goals of Georgia’s public schools;

- provide a basis for instructional improvement through productive teacher performance appraisal and professional growth; and

- implement a performance evaluation system that promotes collaboration between the teacher and evaluator and promotes self-growth, instructional effectiveness, and improvement of overall job performance.

TKES consists of three components that contribute to an overall Teacher Effectiveness Measure (TEM).

- The first component is Teacher Assessment on Performance Standards (TAPS). TAPS provides evaluators with a qualitative, rubrics-based evaluation method that measures teacher performance and relates to quality performance standards, which refer to the major duties performed by the teacher.

- The second component, Student Growth and Academic Achievement, looks different for teachers of tested subjects and teachers of non-tested subjects. For teachers of tested subjects, this component consists of a student growth percentile/value added measure based on state assessment performance. Teachers of non-tested subjects are measured by a GaDOE-approved Student Learning Objective that utilizes district achievement growth measures.

- Surveys of Instructional Practice is the third component. Student surveys are developed at four grade spans (K-2, 3-5, 6-8, and 9-12) to provide information about students’ perceptions of how teachers are performing.

Each component contributes to the overall Teacher Effectiveness Measure (TEM). A definite formula for the weighting of each component will be determined at the completion of the pilot.

South Carolina

ADEPT

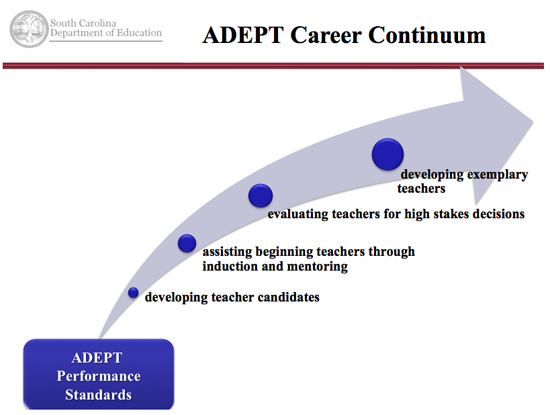

South Carolina’s statewide system for Assisting, Developing, and Evaluating Professional Teaching (ADEPT) is built on the knowledge that effective teaching is fundamental to student success. To that end, the two-fold purpose of ADEPT is (1) to promote teacher effectiveness (thus, the "A" and "D" components of the ADEPT system) and (2) to provide quality assurance and accountability via valid, reliable, consistent, and fair evaluations of teacher performance and effectiveness (i.e., the "E" component of the system).

ADEPT is referred to as a system for good reason: the word system comes from the Latin systema meaning to cause to stand. By continuously assessing and analyzing their professional practices as well as their impact on the learning, achievement, and overall well-being of their students, educators reach successively higher levels of efficacy as they progress through the various stages of the ADEPT career continuum.

Central to the ADEPT system is a set of expectations for what teaching professionals should know, be able to do, and assume responsibility for accomplishing on an ongoing basis. These expectations, called the ADEPT Performance Standards (APSs), are the linchpins that connect all stages of a teacher’s career. Currently, ADEPT includes four parallel sets of APSs for classroom-based teachers, school guidance counselors, library media specialists, and speech-language therapists, respectively. Plans are underway to develop additional sets of standards for other groups of educators, including teachers in virtual/cyber school settings (e-teachers), school psychologists and teacher leaders.

As part of the standards development process, all APSs are aligned with current, nationally recognized sets of professional standards. Most recently, South Carolina convened a statewide task force to undertake a crosswalk study between the APSs for classroom-based teachers and thirteen nationally recognized teacher performance standards, including the recently revised InTASC Model Core Teaching Standards. These crosswalks will drive subsequent revisions to the APSs. Current attention is being given to strengthening the student growth standard, including the addition of a value-added component. Additionally, the state is in the process of developing rubrics that will move beyond the current bimodal rating to a more descriptive four-level rating system for each of the APSs.

The APSs form the basis for the four major components of the ADEPT system: (1) developing teacher candidates, (2) assisting beginning teachers, (3) evaluating teacher performance and effectiveness, and (4) developing exemplary teachers.

ADEPT for Teacher Candidates

This initial phase of the ADEPT system is designed to help preservice teachers develop the knowledge, skills, and dispositions necessary to become effective teachers—that is, to promote student learning, achievement, and well-being. In order to accomplish this goal, the ADEPT office collaborates with all teacher preparation units at institutions of higher education (IHEs)—representing 31 colleges and universities—in the state to help them effectively integrate the ADEPT performance standards throughout candidates’ course work, field experiences, and student teaching.

ADEPT for Teacher Candidates includes a feedback loop that provides IHEs with data on the performance and effectiveness of their graduates. The ADEPT formal evaluation results for all graduates who are practicing teachers in the state’s public schools are reported to each IHE. From these data, the ADEPT formal evaluation "pass rate" is calculated and included in the institution’s Title II—Higher Education report card as an indicator of the quality of the preparation program. For program improvement purposes, each teacher’s ADEPT results are disaggregated so that IHEs can analyze their graduates’ performance overall as well as on a standard-by-standard basis.

Assisting Beginning Teachers

During the first year of practice, each beginning teacher receives assistance and support through an induction program provided by the school district as well as from a qualified mentor who is individually matched and assigned to the teacher. Districts may also assign qualified mentors to second- or third-year teachers who need additional coaching and assistance. To help create and maintain a comprehensive network of support for beginning teachers, the ADEPT office coordinates this statewide initiative, provides technical assistance to schools and school districts, and develops training for mentors. The goal for our beginning teachers is to maximize their teaching effectiveness and increase the likelihood that they will remain in the teaching profession.

Evaluating Teacher Performance and Effectiveness

The high-stakes formal evaluation component of the ADEPT system focuses on quality assurance. The purpose of formal evaluation is to examine the relationship between educator performance and student outcomes from the dual perspectives of accountability and improvement. Regardless of whether a teacher has matriculated through a traditional preparation program, entered the profession via an alternative route, or entered our state’s teaching force via reciprocity, successful completion of an ADEPT formal evaluation is the required, outcomes-based gateway to certificate and contract advancement.

The statewide ADEPT formal evaluation requirements include

- multiple trained and certified evaluators. All evaluators must hold ADEPT evaluator certificates that require (1) a valid South Carolina professional teaching certificate, (2) successful completion of the statewide evaluator training program, and (3) successful completion of the state’s online evaluator examination. The ADEPT office coordinates the evaluator training via a train-the-trainer model; additionally, the ADEPT office is solely responsible for the direct training and certification of all ADEPT trainers;

- multiple sources of data. For example, the required data sources for classroom-based teachers include (1) multiple classroom observations, (2) teacher reflections following each observation, (3) analyses of student progress and achievement using a unit work sample process, (4) long-range instructional plans, (5) professional performance reviews, and (6) professional self-assessments and goal-setting; and

- uniform criteria for successfully completing the evaluation.

All beginning teachers must be formally evaluated in their second or third year of teaching and must successfully complete a year-long formal evaluation in order to be eligible to advance to a professional certificate. Teachers who fail to meet the formal evaluation requirements after two attempts must have their certificates suspended by the state for a minimum two-year period. Throughout the remainder of their careers, experienced teachers may be scheduled for formal evaluation whenever performance weaknesses are evidenced.

School districts use ADEPT formal evaluation results to help make teacher employment and contract decisions as well as to identify individual and overarching professional development needs. Institutions of higher education receive formal evaluation data on the performance of their graduates and use this information to help guide their programmatic decision-making.

Developing Exemplary Teachers

The most advanced stage of the ADEPT system, research and development goals-based evaluation (GBE), focuses on continual professional development through collaborative action research and inquiry. As members of Communities Advancing Professional Practices (CAPPs), teachers identify and address issues that affect student performance and the school community. The process involves (1) identifying specific needs of the learners or the school community; (2) identifying or developing promising practices that address these needs; (3) implementing the practices; (4) determining the impact of these practices on student learning and the school community; and (5) sharing the findings and implications with the larger professional community. Educators are eligible to receive certificate renewal credits for their GBE participation, pending review and approval by their respective supervisors.

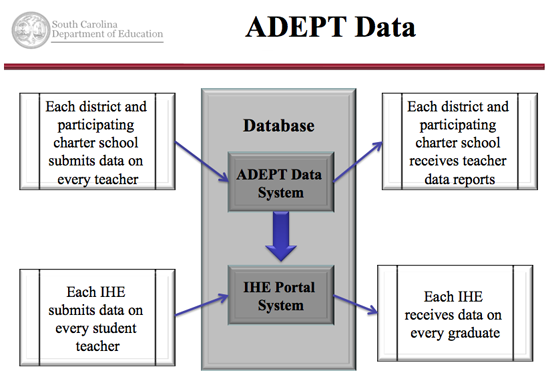

ADEPT Data

The South Carolina Department of Education hosts two secure web-based systems to collect and report ADEPT data on an annual basis.

Each year, school districts input ADEPT information on every teacher into the ADEPT Data System (ADS). This information includes

- the teacher’s contract level and ADEPT process (e.g., induction/mentoring, formal evaluation, GBE) for the year;

- the teacher’s ADEPT results for the year, including the results for each APS if the teacher was formally evaluated;

- the teacher’s hiring status for the following year (e.g., rehired, not rehired, resigned, retired, workforce reduction); and

- the teacher’s recommended contract level for the following year.

An aggregated report is presented annually to the State Board of Education and published to the State Department of Education’s website. Additionally, this information is included in a permanent ADEPT history that is generated for every teacher. These ADEPT histories may be accessed by the teacher, the teacher’s employing school district, and any public school district in South Carolina to which the teacher applies.

Institutions of higher education submit annual ADEPT results on their student teachers via the IHE Portal System. In turn, IHEs receive ADEPT annual reports on their graduates via this same system.

ADEPT: The Next Generation

South Carolina is poised to move forward in its ongoing upgrades to the ADEPT system to help ensure that all teachers are competent, caring, and effective in promoting the learning, achievement, and ultimate success of our students.

References

Aleamoni, L. M. (1999). Student rating myths versus research facts from 1924 to 1998. Journal of Personnel Evaluation in Education, 13(2), 153–166.

Barge, J. D. (2011, September). Teacher and leader evaluation systems. PowerPoint presentation to the Georgia RT3 Great Teachers and Leaders Program, Atlanta, GA.

Center for Education Policy and Practice, Massachusetts Teachers Association. (2011). Massachusetts Department of Elementary and Secondary Education educator evaluation regulations. Boston, MA: Center for Education Policy and Practice. Retrieved from http://www.massteacher.org/advocating/Evaluation.aspx and http://www.massteacher.org/news/archive/2011/05-26.aspx

Crow, T. (2011). The view from the seats: Student input provides a clearer picture of what works in schools. JSD, 32(6), 24–30. Retrieved from http://www.learningforward.org/news/articleDetails.cfm?articleID=2379.

Davis School District. (2009). Educator Assessment System: Acknowledging and honoring quality performance. Farmington, UT: Davis School District. Retrieved from http://www.nctq.org/docs/72-08.pdf.

Den Brok, P., Brekelmans, M., & Wubbels, T. (2004). Interpersonal teacher behaviour and student outcomes. School Effectiveness and School Improvement, 15(3-4), 407–442.

Ferguson, R. F. (2010). Student perceptions of teaching effectiveness. Discussion brief. Cambridge, MA: National Center for Teacher Effectiveness and the Achievement Gap Initiative. Retrieved from http://www.gse.harvard.edu/ncte/news/Using_Student_Perceptions_Ferguson.pdf.

Follman, J. (1992). Secondary school students’ ratings of teacher effectiveness. The High School Journal, 75(3), 168–178.

Follman, J. (1995). Elementary public school pupil rating of teacher effectiveness. Child Study Journal, 25(1), 57–78.

Goe, L., Bell, C., & Little, O. (2008). Approaches to evaluating teacher effectiveness: A research synthesis. Washington, DC: National Comprehensive Center for Teacher Quality. Retrieved from http://www.tqsource.org/publications/EvaluatingTeachEffectiveness.pdf.

Kyriakides, L. (2005). Drawing from teacher effectiveness research and research into teacher interpersonal behaviour to establish a teacher evaluation system: A study on the use of student ratings to evaluate teacher behavior. Journal of Classroom Interaction, 40(2), 44–66. Retrieved from http://www.eric.ed.gov/PDFS/EJ768695.pdf.

Little, O., Goe, L., & Bell, C. (2009). A practical guide to evaluating teacher effectiveness. Washington, DC: National Comprehensive Center for Teacher Quality. Retrieved from http://www.tqsource.org/publications/practicalGuide.pdf.

Lopez, S. J., Agrawal, S., & Calderon, V. J. (2010, August). The Gallup Student Poll technical report. Washington, DC: Gallup, Inc. Retrieved from http://www.gallup.com/consulting/education/148115/gallup-student-poll-technical-report.aspx

McQueen, C. (2001). Teaching to win. Kappa Delta Pi Record, 38(1), 12–15.

MET Project. (2010a). Student perceptions and the MET Project. Seattle, WA: Bill & Melinda Gates Foundation. Retrieved from http://www.metproject.org/downloads/Student_Perceptions_092110.pdf.

MET Project. (2010b). Working with teachers to develop fair and reliable measures of effective teaching. Seattle, WA: Bill & Melinda Gates Foundation. Retrieved from http://www.metproject.org/downloads/met-framing-paper.pdf.

MET Project. (2010c). Learning about teaching: Initial findings from the Measures of Effective Teaching project (Research paper). Seattle, WA: Bill & Melinda Gates Foundation. Retrieved from http://www.metproject.org/downloads/Preliminary_Findings-Research_Paper.pdf.

Murphy, P. K., Delli, L., & Edwards, M. (2004, Winter). The good teacher and good teaching: Comparing beliefs of second-grade students, preservice teachers, and inservice teachers. The Journal of Experimental Education, 72(2), 69–92.

National Comprehensive Center for Teacher Quality. (2010). State database of teacher evaluation policies—Selecting measures: Additional measures of teacher performance (Website). Chicago, IL: Author. Retrieved from http://resource.tqsource.org/stateevaldb/.

National Council on Teacher Quality. (2011). State of the states: Trends and early lessons on teacher evaluation and effectiveness policies. Washington, DC: Author. Retrieved from http://www.nctq.org/p/publications/docs/nctq_stateOfTheStates.pdf.

Opdenakker, M-C., Maulana, R., & den Brok, P. (2011). Teacher-student interpersonal relationships and academic motivation within one school year: Developmental changes and linkage. School Effectiveness and School Improvement, 1–25. doi: 10.1080/09243453.2011.619198

Peterson, K. D., Wahlquist, C., & Bone, K. (2000). Student surveys for school teacher evaluation. Journal of Personnel Evaluation in Education, 14(2), 135–153.

Peterson, K. D., Wahlquist, C., Bone, K., Thompson, J., & Chatterton, K. (2001). Using more data sources to evaluate teachers. Educational Leadership, 58(5), 40–43.

Ramsdell, R. (2011, December). Enhancing teacher evaluation and feedback systems through effective classroom observation and Tripod student surveys (PowerPoint slides). Paper presented at the Teacher Evaluation That Works Conference, Cromwell, CT. Retrieved from http://www.capss.org/uploaded/Hard_Copy_Documents/Teacher_Evaluation_That_Works/2011_11_28_Cambridge_Ed_Fry_Ramsdell_TES_Summary_CT_Session_Handout.pdf.

Tripod Project. (2011). Tripod survey assessments: Multiple measures of teaching effectiveness and school quality. Westwood, MA: Cambridge Education. Retrieved from http://www.tripodproject.org/index.php/about/about_background.

Wilkerson, D. J., Manatt, R. P., Rogers, M. A., & Maughan, R. (2000). Validation of student, principal, and self-ratings in 360 Degree Feedback for teacher evaluation. Journal of Personnel Evaluation in Education, 14(2), 179–192.

Worrell, F. C., & Kuterbach, L. D. (2001). The use of student ratings of teacher behaviors with academically talented high school students. Journal of Secondary Gifted Education, 12(4), 236–247.

Wubbels, T., Levy, J., & Brekelmans, M. (1997). Paying attention to relationships. Educational Leadership, 54(7), 82–86.

Wubbels, T., & Levy, J. (Eds.). (1993). Do you know what you look like?: Interpersonal relations in education. London: Falmer Press.

Yazzie-Mintz, E. (2010). Charting the path from engagement to achievement: A report on the 2009 High School Survey of Student Engagement. Bloomington, IN: Center for Evaluation and Education Policy.

Additional Resources

The reader may find the resources listed below to be of value in examining the evaluation of teacher effectiveness.

Gallagher, C., Rabinowitz, S., & Yeagley, P. (2011). Key considerations when measuring teacher effectiveness: A framework for validating teachers’ professional practices (AACC Report). San Francisco and Los Angeles, CA: Assessment and Accountability Comprehensive Center. Retrieved from http://www.aacompcenter.org/cs/aacc/download/rs/26517/aacc_2011_tq-report.pdf?x-r=pcfile_d.

Goe, L., Holdheide, L., & Miller, T. (2011). A practical guide to designing comprehensive teacher evaluation systems: A tool to assist in the development of teacher evaluation systems. Washington, DC: National Comprehensive Center for Teacher Quality. Retrieved from http://www.tqsource.org/publications/practicalGuideEvalSystems.pdf.

Holdheide, L. R., Goe, L., Croft, A., & Reschly, D. J. (2010). Challenges in evaluating special education teachers and English language learner specialists. Research & Policy Brief. Washington, DC: National Comprehensive Center for Teacher Quality. Retrieved from http://www.eric.ed.gov/PDFS/ED520726.pdf.

Learning Point Associates. (2011). Evaluating teacher effectiveness: Emerging trends reflected in the State Phase 1 Race To The Top applications. Chicago, IL: Learning Point Associates. Retrieved from http://www.learningpt.org/pdfs/RttT_Teacher_Evaluation.pdf.

Memphis City Schools. (2011). Memphis Teacher Effectiveness Initiative. Memphis, TN: Memphis City Schools. Retrieved from http://www.mcstei.com/strategies/define/.

MET Project. (2010). A composite measure of teacher effectiveness. Seattle, WA: Bill & Melinda Gates Foundation. Retrieved from http://www.metproject.org/downloads/Value-Add_100710.pdf.

Potemski, A., Baral, M., & Meyer, C. (2011). Alternative measures of teacher performance. Washington, DC: National Comprehensive Center for Teacher Quality. Retrieved from http://www.tqsource.org/pdfs/TQ_Policy-to-PracticeBriefAlternativeMeasures.pdf.

This briefing paper is one of several prepared by the Texas Comprehensive Center at SEDL. These papers address topics on current education issues related to the requirements and implementation of the No Child Left Behind Act of 2001. This service is paid for in whole or in part by the U.S. Department of Education under grant # S283B050020. The contents do not, however, necessarily represent the policy of the U.S. Department of Education or of SEDL, and one should not assume endorsement by either entity. Copyright © 2012 by SEDL. All rights reserved. No part of this document may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from SEDL. Permission may be requested by submitting a copyright request form online at www.sedl.org/about/copyright_request.html. After obtaining permission as noted, users may need to secure additional permissions from copyright holders whose work SEDL included to reproduce or adapt for this document.

Wesley Hoover, SEDL President and CEO

Briefing Paper Team: Jackie Burniske, Program Associate; Debra Meibaum, Program Associate; Shirley Beckwith, Communications Associate; Jesse Mabus, Information Specialist; Haidee Williams, Project Director.